- AI’s Environmental Impact: The average carbon footprint for an AI query is 0.03-1.14 grams CO₂e.

- Efficiency Gap: Google Gemini (0.24 Wh, 0.03 g CO₂e) and ChatGPT (0.34 Wh, 0.165 g CO₂e) are far more efficient per query than dense models like Mistral's Le Chat (1.14 g CO₂e).

- Reasoning Spike: Reasoning models use significantly more energy than standard queries (estimates range from 50x to 100x).

- Usage is growing: Data center power usage jumped 72% from 2019 to 2023.

- Water cost: Average data centers directly consume 1,900 litres of water per MWh for cooling, plus an additional 4,540 litres per MWh indirectly through electricity generation (EESI).

- Scope 3 matters: AI hardware emissions fall under Scope 3 Category 1 for businesses.

- Inference is key: Over 80% of AI electricity consumption comes from the use phase (inference) and not training.

AI’s Environmental Impact: A Full Lifecycle Analysis

We know you might be tempted to hit that "Summarize with ChatGPT" button at the top of this blog.

It is ironic, isn't it? We use AI tools to save time reading about the environmental cost of AI tools.

Large Language Models (LLMs) have fundamentally changed the way we work. We use them to draft emails, write code, and analyze data. Tools like ChatGPT, Gemini, and Claude feel like magic because the answers appear instantly.

But there is a physical reality behind that digital magic.

Every time you prompt a model, a server in a massive data center consumes energy. That energy comes with a price tag.

What is the environmental cost of AI? And how do we measure it accurately?

To understand the impact of AI on the environment, we have to look at the big picture.

Is AI actually bad for the environment?

The short answer is currently yes, but the details matter.

AI isn't inherently "bad," but its current infrastructure is incredibly resource-intensive.

The problem stems from three main factors:

- The energy grid isn't green enough to support the massive power use required by data centers

- The physical hardware needed to run these models involves intensive manufacturing processes

- The water required to cool these servers is straining local resources

So why is AI bad for the environment? Right now, the energy it runs on comes largely from fossil fuels.

As Sam Altman, CEO of OpenAI, recently noted,

“The cost of AI will converge to the cost of energy.”

That means the abundance of AI is strictly limited by how much energy we can generate.

If that energy comes from coal or natural gas, the correlation between AI and climate change becomes steeper.

The full lifecycle: measuring AI’s carbon footprint

To truly understand the AI carbon footprint, you have to look beyond the electricity bill. You need a lifecycle approach.

This means looking at everything from the mine where the minerals for the chips were dug up, all the way to the daily operations of the server farm.

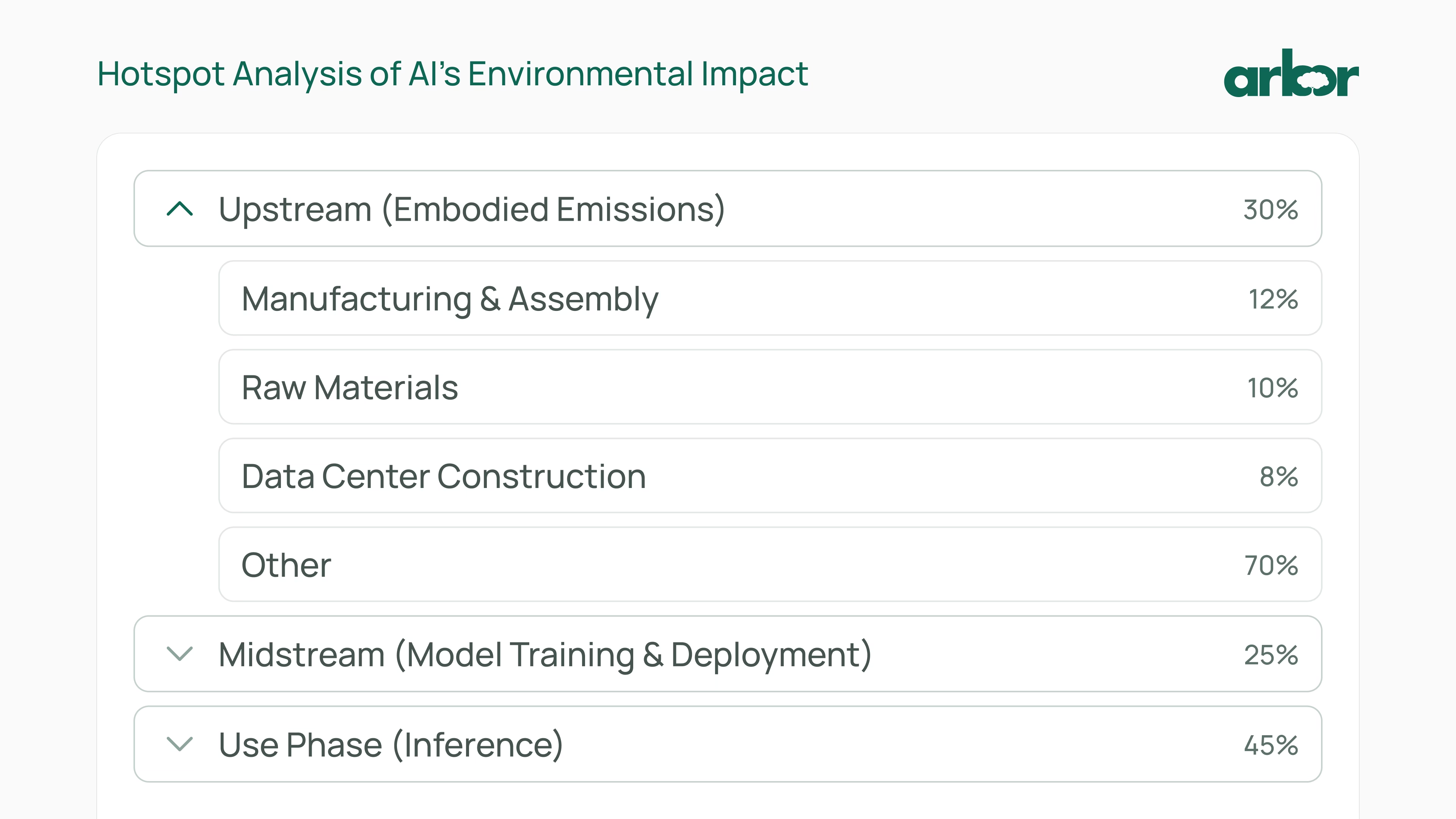

Embodied emissions & Scope 3

For businesses, this is the part that often gets missed in reporting.

Before a data center processes a single byte of data, it has already emitted a massive amount of carbon.

This is called embodied carbon. It includes the extraction of raw materials, the manufacturing of GPUs and servers, and the construction of the data center buildings themselves.

If you are a company licensing AI software or using cloud services (like Azure or AWS), these hardware emissions fall under Scope 3 emissions (specifically Category 1: Purchased Goods and Services).

You might think,

"I didn't buy the server, Amazon did."

But under carbon accounting standards like the GHG Protocol, a portion of that server's embodied carbon belongs to you based on your usage.

This is a critical reporting gap for many enterprises. You cannot claim to be net-zero if you ignore the massive supply chain emissions from your AI compute providers.
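As a rough illustration of how a usage-based share could be worked out, the sketch below allocates a server's embodied carbon in proportion to the hours your workloads ran on it. The proportional rule, the function name `allocated_embodied_kg`, and every number are assumptions for illustration only. They are not figures or a prescribed method from the GHG Protocol or any cloud provider.

```python
def allocated_embodied_kg(server_embodied_kg: float,
                          lifetime_hours: float,
                          your_usage_hours: float) -> float:
    """Share of a server's embodied carbon attributed to one customer,
    split in proportion to usage hours (an assumed, simplified rule)."""
    if lifetime_hours <= 0:
        raise ValueError("lifetime_hours must be positive")
    if not 0 <= your_usage_hours <= lifetime_hours:
        raise ValueError("usage must be between 0 and lifetime_hours")
    return server_embodied_kg * (your_usage_hours / lifetime_hours)


# Hypothetical inputs: 1,000 kg CO2e embodied, a 4-year service life,
# and 500 hours of use by your workloads.
lifetime = 4 * 365 * 24  # 35,040 hours
share = allocated_embodied_kg(1000.0, lifetime, 500.0)
print(f"Allocated embodied carbon: {share:.2f} kg CO2e")
```

In practice, providers that publish customer-level emissions data do this allocation for you. The point of the sketch is only that the share scales with usage.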

Operational emissions: Training vs. Inference

Once the hardware is in place, we look at operational emissions. This is the electricity used to run the servers and the cooling systems.

AI energy consumption is usually broken down into two phases.

- Training: This is the "learning" phase where a model like GPT-4 reads the internet. It is like sending a student to university for a decade. It takes weeks or months of massive computing power.

- Inference: This is the "doing" phase. This happens every time you ask a question.

For a long time, researchers focused on training because it makes for scary headlines. Training GPT-3, for example, consumed 1,287 MWh of electricity (University of Michigan).

However, Schibsted found that AI inference (the use phase) currently accounts for over 80% of total AI electricity consumption.

Training happens once or periodically. Inference happens millions of times a day, every day, forever. This is where the bulk of the carbon footprint of AI models actually lives.
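To get a feel for how quickly inference can overtake a one-off training run, here is a back-of-the-envelope sketch. It combines the GPT-3 training figure above with the 0.34 Wh per-query figure reported for ChatGPT later in this article. The daily query volume is a hypothetical placeholder, and mixing figures from different models is itself an assumption.

```python
TRAINING_MWH = 1287.0            # GPT-3 training energy cited above
WH_PER_QUERY = 0.34              # ChatGPT average query (cited later in this article)
QUERIES_PER_DAY = 500_000_000    # hypothetical daily volume, for illustration only


def daily_inference_mwh(wh_per_query: float, queries_per_day: int) -> float:
    """Daily inference energy in MWh (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * queries_per_day / 1_000_000


daily = daily_inference_mwh(WH_PER_QUERY, QUERIES_PER_DAY)
days_to_match = TRAINING_MWH / daily

print(f"Inference per day: {daily:.0f} MWh")
print(f"Days of inference to match the training run: {days_to_match:.1f}")
```

Under these assumptions, inference matches the entire training run in roughly a week, and then keeps going.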

Calculated: The carbon footprint per AI query

The average carbon footprint for an AI query is 0.03-1.14 grams CO₂e.

This is a range between Google Gemini’s (lowest) and Mistral’s Le Chat (highest) carbon footprint per query. Since not all models make their energy consumption data public, these are estimates based on external models. Previously, estimates placed the average carbon impact at 4.32 grams CO₂e per query. More importantly, these are evolving numbers instead of fixed constants.

Even for a single query, emissions can change drastically based on the number of tokens processed. A short question about the weather generates far fewer emissions than a prompt with a 100-page document attached.

Here is the breakdown of Energy (Wh) and Carbon Dioxide Equivalent (CO₂e) per query for the major players:

ChatGPT by OpenAI

Sam Altman recently disclosed updated figures for standard ChatGPT queries, which are comparable to Google's efficiency.

- Energy: 0.34 Wh per average query

- Carbon: 0.165 grams CO₂e (estimated by Arbor based on US Grid)

Gemini by Google

Google Cloud’s infrastructure is highly optimized, resulting in some of the lowest reported numbers in the industry.

- Energy: 0.24 Wh per median text prompt

- Carbon: 0.03 grams CO₂e

Le Chat by Mistral

Mistral’s "Large 2" model is a dense, high-performance model. Its footprint is significantly higher, likely due to the model's density and less optimization on the inference side compared to the tech giants.

- Energy: Not disclosed (implied >3 Wh)

- Carbon: 1.14 grams CO₂e per 400-token response
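Per-query carbon is roughly the energy used multiplied by the carbon intensity of the electricity behind it. Working backwards from the figures above shows the intensity each energy and carbon pair implies. This is a sketch that assumes the carbon figures cover operational electricity only, which the underlying disclosures may not. The function name is illustrative.

```python
def implied_grid_intensity(carbon_g: float, energy_wh: float) -> float:
    """Grams CO2e per kWh implied by a per-query carbon and energy figure."""
    if energy_wh <= 0:
        raise ValueError("energy_wh must be positive")
    return carbon_g / (energy_wh / 1000.0)  # Wh -> kWh


# (grams CO2e per query, Wh per query) as quoted above
figures = {
    "ChatGPT": (0.165, 0.34),
    "Gemini": (0.03, 0.24),
}

for name, (carbon_g, energy_wh) in figures.items():
    intensity = implied_grid_intensity(carbon_g, energy_wh)
    print(f"{name}: ~{intensity:.0f} g CO2e/kWh")
```

The gap between the two results suggests that much of the difference in carbon per query comes from the electricity mix and accounting method, not only from the energy each query uses.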

Real-world Equivalents

Taking the midpoint of the range above (0.585 grams CO₂e per query), 1 billion messages sent to an AI model add up to about 585 tonnes CO₂e, which is comparable to:

- 2,211,300 km driving in a gasoline truck

- 9,746,100 days of carbon consumption for 1 tree

- 135,720,000 charges of the average smartphone

Calculated using the Carbon Equivalent Calculator.
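The scaling from a per-query figure to a total is simple multiplication. A minimal sketch, using the 0.03 and 1.14 gram endpoints quoted above (the function name and message counts are illustrative):

```python
LOW_G, HIGH_G = 0.03, 1.14  # per-query range quoted above, in grams CO2e


def total_kg(per_query_g: float, messages: int) -> float:
    """Total emissions in kilograms for a number of messages."""
    return per_query_g * messages / 1000.0  # g -> kg


midpoint_g = (LOW_G + HIGH_G) / 2  # 0.585 g

for messages in (1_000_000, 1_000_000_000):
    kg = total_kg(midpoint_g, messages)
    print(f"{messages:>13,} messages -> {kg:,.0f} kg CO2e")
```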

The "Reasoning" Model Spike

The numbers above are for standard queries. The game changes completely if you use an AI "reasoning model."

These models "think" before they speak (aka a "chain of thought" process), generating thousands of hidden tokens to check their logic.

- Standard Models: Designed for efficiency, these models (like GPT-4o) predict the next word instantly (“one shot”) without internal reflection, keeping energy costs low.

- Reasoning Models: Conversely, models like OpenAI’s o1 trigger a complex "chain of thought" to verify logic before responding, causing energy costs to skyrocket.

Recent data suggests that advanced reasoning tasks can consume over 33 Wh per long prompt (arxiv). This is up to 50.2x more energy than a standard request (Frontiers).

Using a massive reasoning model for a simple task is like using a sledgehammer to crack a nut. It is a waste of high-carbon energy.
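To put the sledgehammer in numbers, the sketch below compares the energy for a batch of queries at the standard ChatGPT figure (0.34 Wh) and at the long-prompt reasoning figure (33 Wh). These two numbers come from different sources and measure different kinds of prompts, so treat the ratio as an illustration under that assumption, not a benchmark. The batch size is hypothetical.

```python
STANDARD_WH = 0.34   # ChatGPT average query, cited above
REASONING_WH = 33.0  # long reasoning prompt, cited above ("over 33 Wh")


def batch_energy_kwh(wh_per_query: float, n_queries: int) -> float:
    """Energy in kWh for a batch of queries."""
    return wh_per_query * n_queries / 1000.0  # Wh -> kWh


n = 1000  # hypothetical batch of simple tasks
standard_kwh = batch_energy_kwh(STANDARD_WH, n)
reasoning_kwh = batch_energy_kwh(REASONING_WH, n)
ratio = reasoning_kwh / standard_kwh

print(f"Standard model:  {standard_kwh:.2f} kWh")
print(f"Reasoning model: {reasoning_kwh:.2f} kWh")
print(f"Ratio: ~{ratio:.0f}x")
```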

AI’s water footprint: Why data centers are thirsty

Carbon gets all the attention, but water is the silent crisis. Why is AI bad for the environment water-wise?

Servers generate heat. A lot of it. To keep them from melting down, data centers use massive industrial cooling systems. Many of these systems rely on evaporative cooling, which consumes water to lower temperatures.

The water consumption varies wildly by provider (per query):

- Google Gemini: 0.26 mL

- OpenAI ChatGPT: 0.32 mL (~5 drops)

- Mistral Le Chat: 45 mL

Mistral’s consumption is notably higher, over 140x more water per query than Google or OpenAI. This stark difference highlights how much infrastructure efficiency (cooling systems, location, and hardware) matters.

With ChatGPT alone processing hundreds of millions of responses daily, even at 0.32 mL, the aggregate volume is massive.

But if inefficient models become the norm, the water demand could skyrocket to millions of gallons per day, competing with local agriculture and residential needs.
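Here is a rough sketch of that aggregate, using the per-query figures listed above. The daily query volume is a hypothetical placeholder (the article only says "hundreds of millions"), and applying one volume to every provider is an assumption made purely for comparison.

```python
ML_PER_QUERY = {
    "Google Gemini": 0.26,
    "OpenAI ChatGPT": 0.32,
    "Mistral Le Chat": 45.0,
}
QUERIES_PER_DAY = 500_000_000       # hypothetical volume, for illustration only
LITRES_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon


def daily_litres(ml_per_query: float, queries: int) -> float:
    """Daily water consumption in litres."""
    return ml_per_query * queries / 1000.0  # mL -> L


for provider, ml in ML_PER_QUERY.items():
    litres = daily_litres(ml, QUERIES_PER_DAY)
    gallons = litres / LITRES_PER_US_GALLON
    print(f"{provider}: {litres:,.0f} L/day (~{gallons:,.0f} US gal)")
```

At the highest per-query rate, this hypothetical volume works out to several million gallons per day, which is the scale the paragraph above warns about.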

The central role of data centers

You cannot discuss AI without discussing its home. Are AI data centers bad for the environment?

They are the factories of the 21st century. And like traditional factories, they produce waste and consume vast resources.

Here are some typical environmental demands from data centers:

- Energy Density: AI racks consume far more power per square foot than traditional cloud storage servers

- Grid Strain: The local power grid often has to fire up peaker plants (often coal or gas) to handle the constant, high load of AI facilities

- Land Use: The physical footprint of these centers is expanding, often requiring significant land development

“To truly achieve net-zero, it’s essential to consider the full lifecycle of the data center,”

says Abdullah Choudhry, Co-Founder of Arbor.

“This means designing data centers with sustainability in mind: selecting low-carbon materials, adopting designs for easier upgrades, and investing in green energy.”

How to reduce AI’s environmental impact

We’re not slowing down on the usage of AI. It is too useful. So the goal regarding the sustainability of AI is to decouple the emissions from the benefits.

Here is how the industry is tackling this to reduce AI energy usage:

1. Optimize software and algorithms

LLM efficiency compounds fast. Language-model algorithms need about 2× less compute every ~8 months (Epoch), which adds up to 10–100× efficiency gains over just a few years.
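The compounding is easy to check. Assuming a clean halving of required compute every 8 months (a simplification of the trend cited above), the sketch below computes the cumulative gain after a given time and how long it takes to reach 10x and 100x. The function names are illustrative.

```python
import math

HALVING_MONTHS = 8.0  # compute needed halves roughly every 8 months (cited above)


def efficiency_gain(months: float) -> float:
    """Cumulative efficiency multiplier after a number of months."""
    return 2 ** (months / HALVING_MONTHS)


def months_to_reach(gain: float) -> float:
    """Months needed to reach a given efficiency multiplier."""
    return HALVING_MONTHS * math.log2(gain)


print(f"Gain after 2 years: {efficiency_gain(24):.0f}x")
print(f"Months to reach 10x:  {months_to_reach(10):.1f}")
print(f"Months to reach 100x: {months_to_reach(100):.1f}")
```

Under this assumption, 10x arrives in a little over two years and 100x in under four and a half.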

2. Green the grid

This is the big lever. If the data center runs on solar or wind power, the operational carbon can drop to near zero. Major providers like Google and Microsoft are investing heavily in 24/7 carbon-free energy matching.

3. Strategic location

You can reduce emissions simply by choosing where your computing happens. Training a model in a region with a hydro-heavy grid (like Quebec or Norway) creates far less carbon than training the exact same model in a coal-heavy region (like parts of the US Midwest or Asia).
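The effect of location comes down to one multiplication: energy used times the carbon intensity of the local grid. The sketch below applies it to the GPT-3 training energy cited earlier. The two intensity values are hypothetical placeholders chosen to represent a low-carbon and a high-carbon grid. They are not measured values for Quebec, Norway, or any other real region.

```python
TRAINING_MWH = 1287.0  # GPT-3 training energy cited earlier in this article

# Hypothetical grid intensities in kg CO2e per MWh (placeholders, not real data)
GRID_INTENSITY = {
    "hydro-heavy grid (hypothetical)": 30.0,
    "coal-heavy grid (hypothetical)": 700.0,
}


def operational_tonnes(energy_mwh: float, kg_per_mwh: float) -> float:
    """Operational emissions in tonnes CO2e."""
    return energy_mwh * kg_per_mwh / 1000.0  # kg -> tonnes


for grid, intensity in GRID_INTENSITY.items():
    tonnes = operational_tonnes(TRAINING_MWH, intensity)
    print(f"{grid}: {tonnes:,.1f} t CO2e")
```

Whatever the real intensities are, the ratio between them carries straight through to the emissions of the same workload.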

4. Extend hardware lifespan

Keeping servers in use for just one or two years longer drastically reduces the annualized embodied carbon. It spreads that initial manufacturing cost over more productive hours.
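A minimal sketch of that annualization, using a hypothetical embodied footprint of 1,000 kg CO₂e per server (an illustrative number, not a measured one):

```python
def annualized_embodied_kg(embodied_kg: float, service_years: float) -> float:
    """Embodied carbon spread evenly over the years a server stays in use."""
    if service_years <= 0:
        raise ValueError("service_years must be positive")
    return embodied_kg / service_years


EMBODIED_KG = 1000.0  # hypothetical per-server embodied carbon

baseline = annualized_embodied_kg(EMBODIED_KG, 4)  # 4-year refresh cycle
extended = annualized_embodied_kg(EMBODIED_KG, 6)  # kept two years longer
reduction = 1 - extended / baseline

print(f"4 years: {baseline:.1f} kg CO2e/year")
print(f"6 years: {extended:.1f} kg CO2e/year")
print(f"Reduction in annualized embodied carbon: {reduction:.0%}")
```

The percentage reduction depends only on the two lifespans, not on the embodied figure itself. This sketch ignores the efficiency gains of newer hardware, which pull in the other direction.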

The future of AI and the environment

AI is an amplifier. It amplifies productivity, creativity, and, right now, energy consumption.

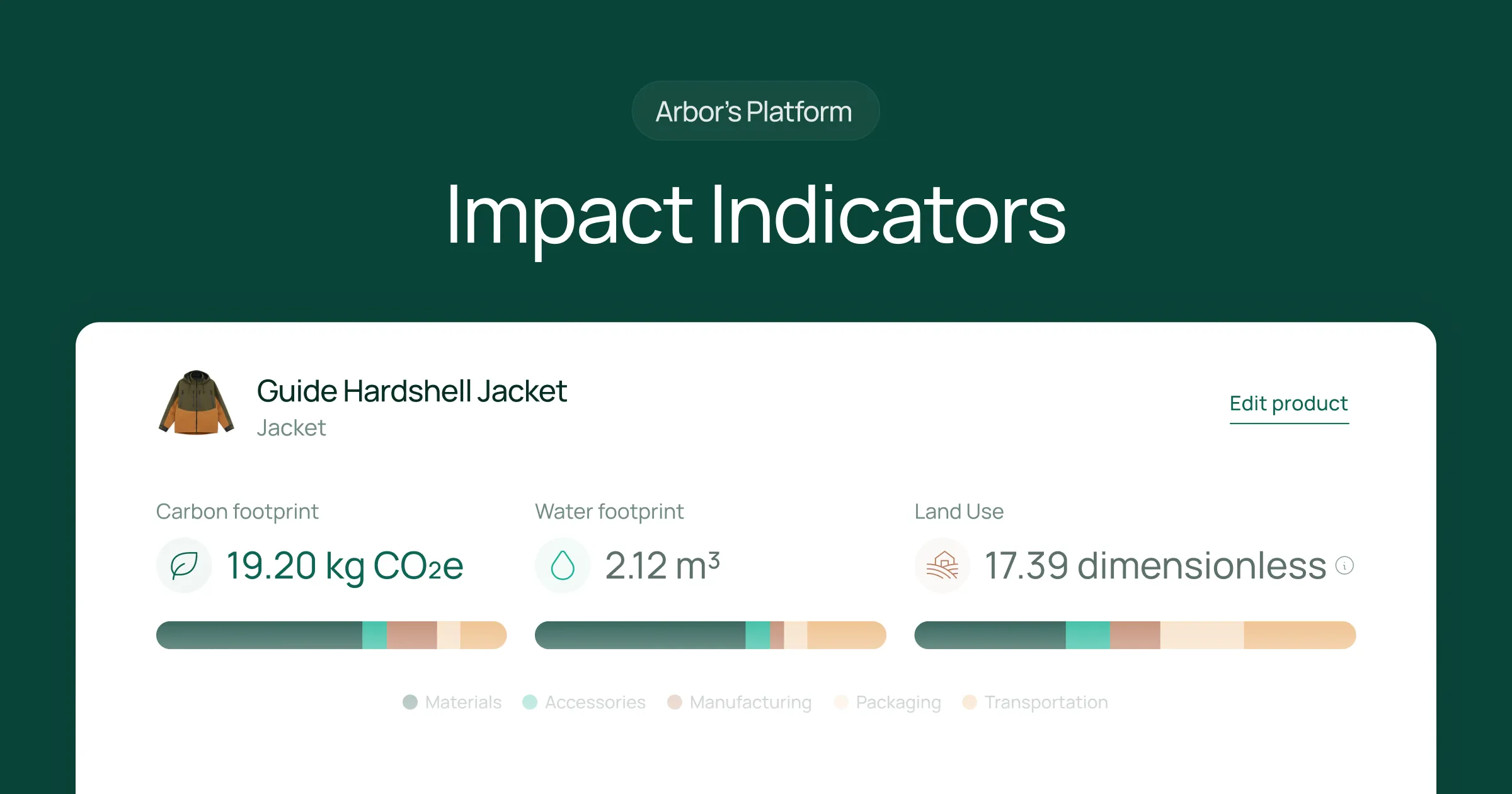

For businesses, the first step isn't to panic. It is to measure. You cannot manage what you do not quantify. You need to understand the Scope 3 impact of your digital tools just as clearly as you understand the impact of your physical logistics.

The future of AI depends on energy. And the future of our planet depends on that energy being clean.

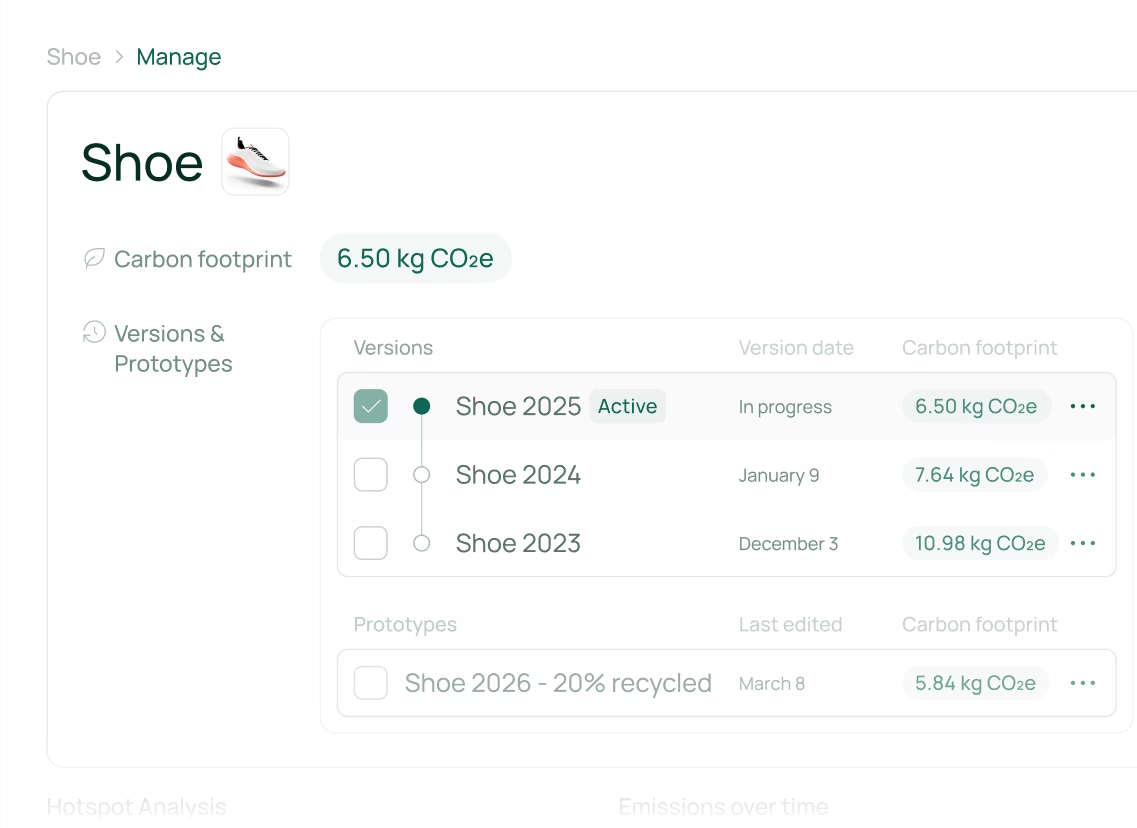

Measure your emissions with Arbor

Simple, easy carbon accounting.

FAQ about AI’s environmental impact

What is the carbon footprint of a ChatGPT query?

A standard ChatGPT query uses about 0.34 watt-hours of energy, which Arbor estimates at roughly 0.165 grams CO₂e on the US grid. Across the major providers, the range is 0.03-1.14 grams CO₂e per query. However, using advanced "reasoning" models (like o1) can increase this impact by 50-100x, creating a much larger footprint.

Is using AI bad for the environment?

It puts significant strain on the environment through high energy consumption, water usage for cooling, and the mining of raw materials for hardware. However, AI also offers tools to optimize energy grids and accelerate climate research, so its net impact depends on how we manage its infrastructure.

How much water do AI data centers use?

It varies by provider. On a macro scale, average data centers consume 1,900 litres of water directly for cooling per megawatt-hour (MWh), while indirect water consumption from electricity generation adds roughly 4,540 litres per MWh (EESI).

What are Scope 3 emissions in relation to AI?

For businesses using AI, the emissions from server manufacturing and upstream energy production fall under Scope 3 Category 1 (Purchased Goods and Services). This is often a "hidden" part of a company's carbon footprint that needs to be accounted for.

How can we reduce AI's environmental impact?

We can lower emissions by powering data centers with renewable energy, avoiding "reasoning" models for simple tasks, extending the lifespan of hardware, and locating servers in cooler climates or regions with greener power grids.

Is AI increasing carbon emissions?

AI does contribute to increased carbon emissions due to the energy-intensive processes involved, both in training large models and, above all, in running them at scale (inference). Companies like Google and Microsoft have reported significant emissions linked to their AI operations, highlighting the technology’s role as a contributor to climate change.

Can AI help reduce its electricity consumption?

AI's energy consumption can be minimized by designing smaller, more task-specific AI models and enhancing data processing efficiency. Reducing the unnecessary use of data in AI systems can also significantly reduce energy consumption.

What is the impact of AI on climate change?

AI's significant energy consumption and reliance on non-renewable resources increase greenhouse gas emissions, contributing to climate change. However, AI also offers potential solutions for environmental management, such as optimizing energy use and supporting the development of smart grids.

What are the benefits of making AI environmentally sustainable?

Shifting AI operations to rely on sustainable practices can significantly reduce carbon emissions and mitigate the strain on water systems. Additionally, AI can be harnessed to create eco-friendly technologies and systems, demonstrating its potential to impact environmental health positively.
